Contents

- Risking the software organization

- Working dangerously

- Necessary, but inadequate steps toward risk reduction

- Manual analysis

- Debugging

- Code Review

- Dynamic analysis

- Test

- Stomping problems at the source

- Increasing effectiveness with fewer resources

- Brushing out problems

- Team linting

- Long-term protection

- Historical lint

- Using lint tools today

- Analysis report

- Call tree report with include-file tree

- Cross-reference report

- Increasing competitive viability

- About the Author

- Resources

Introduction

"The longer a software bug goes undetected the more costly it is to identify and eradicate." In spite of the near-adage status of this statement, too many software development organizations are failing to adopt best practices and to implement available tools that can easily eradicate software problems before their associated risks and costs escalate. The end result is that the overwhelming majority of development organizations seem to be risking their existence by taking more time than necessary to ship buggy products.

This article identifies the risks associated with latent software problems, reviews the alternatives development organizations might consider to reduce such risk, discusses how developers can eliminate problems earlier in development, and presents the history, use, and benefits of static source code analysis tools for eliminating problems at the source.

Risking the software organization

"The longer a software bug goes undetected the more costly it is to identify and eradicate."

This statement has become almost an adage for software developers. It's accepted almost without question because developers live daily with the associated risks of latent problems in software.

Software problems identified after compiling an application can halt development and take hundreds of developer hours to identify and correct, with the solution often causing additional problems. Problems that remain hidden until users find them not only cause customers inconvenience and expense but can also result in PR nightmares that threaten the developer's profitability. If a magazine reviewer identifies a serious problem, not only will the company's reputation be threatened, but the success of the product might also be at risk.

Working dangerously

Regardless of the risks, research shows a seeming disconnect between accepting the adage and acting on it. In fact, many software development organizations are failing to implement available tools for eradicating software problems and improving development processes, according to the 2001 Software Industry Survey conducted by Cleanscape Software International.

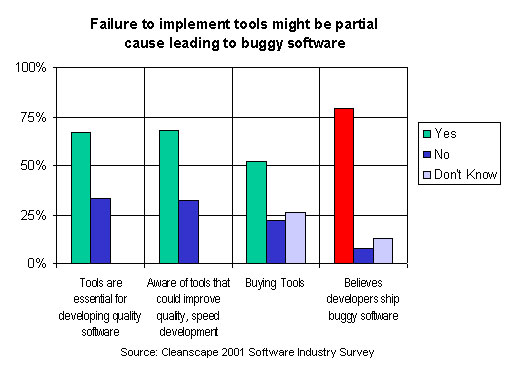

While 67% of the software development professionals surveyed believe software tools are essential for developing quality software, and 68% said they were aware of tools that could speed development while increasing product quality, only 52% said their organizations planned to buy such tools. This disconnect is likely a strong factor in why 79% of the developers surveyed said they believe software development companies ship buggy software.

In other words, too many organizations recognize the value of tools that can eradicate software problems before their risks and costs escalate, yet fail to adopt them, and end up taking more time than necessary to ship buggy products. (See Chart 1)

Chart 1

Caption: 2001 Cleanscape survey results. 67% of respondents believe software tools are essential for developing quality software, 68% are aware of tools that could speed development while increasing product quality, only 52% said their organizations plan to buy such tools, and 79% believe software development companies ship buggy software.

Necessary, but inadequate steps toward risk reduction

Acting on the adage to reduce the risks associated with latent problems in software requires introducing a step in the development process to help developers identify and eliminate problems as early as possible - before the costs and risks of eradication escalate.

Common bug eradication practices include manual analysis, debugging, code review, dynamic analysis, and testing. While necessary, these steps can be inadequate, time-consuming, and expensive.

Manual analysis

The most rudimentary way for programmers to check code for problems is to read it, either on screen or on a printout. Debugging by printf might be sufficient for expert programmers working on simple applications, but it forces the organization to rely entirely on the programmer's skill and might leave an insufficient documentation trail. The process is highly subject to human error and can be difficult for managers to control. The more complex the code, the more programmer-hours a thorough manual check takes, and the less likely it is to catch the problems that can eventually cause significant expense or risk to the organization.

Debugging

In the quest to kill bugs, a typical development team might build, run, and debug a program hundreds of times during development, returning to coding whenever a problem is identified. Manual debugging is time-consuming and can account for more than 50% of overall development time. Part of the reason for this resource-intensive cycle is that typical compilers do not detect hundreds of coding problems that can lurk as latent bugs.

Code Review

A code review can be an effective way for a team to check whether code meets local standards, and it might even identify some problems before compiling. However, while important, a code review is limited in value for the following reasons:

- It can be an inefficient use of expensive resources.

- It increases the potential for inconsistent process and results across the team, from project to project, and throughout the organization.

- It provides only a limited documentation trail for programmers and management.

- Errors that reviewers miss still pass through the compiler undetected.

Dynamic analysis

Dynamic analysis attempts to find errors while a program is executing. The objective of dynamic analysis is to reduce debugging time by automatically pinpointing and explaining errors as they occur.

The use of dynamic analysis tools can reduce the need for the developer to recreate the precise conditions under which an error occurs. However, a bug identified at execution might be far removed from the code and the programmer that introduced it, and it still must be returned to coding for correction.

Test

Test teams attempt to determine whether a software application, when executed, matches its specification and runs correctly in its intended environment. Common steps in the test phase of development include modeling the software environment, selecting test scenarios, running and evaluating test scenarios, and measuring testing progress.

While testing can enhance a development team's ability to identify and eradicate problems, adequately testing today's complex software systems requires enterprise-class test automation tools, with the associated infrastructure and trained staff. This translates into more expensive resources spent identifying problems that are further removed from their source. Waiting for the testing stage to identify problems can also be a costly mistake, because standard test tools typically operate only on runtime units, or executables, and problems usually must be returned to coding for correction.

Stomping problems at the source

Increasing effectiveness with fewer resources

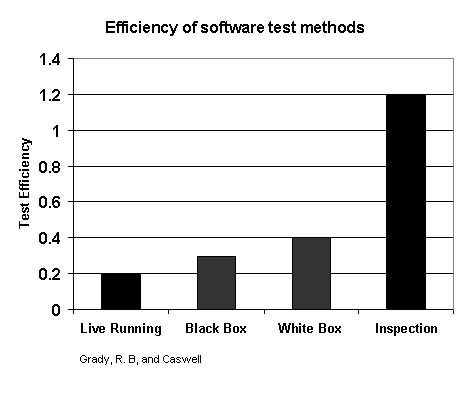

Even a team that fully implements code reviews, debugging, dynamic analysis, and testing can greatly improve its problem identification and eradication efforts by automatically identifying problems at their source: in the code, before compiling or execution. This can be done with a static source code analyzer. Grady and Caswell found static testing to be four times as effective as dynamic testing (see Chart 2). Static source code analysis also helps speed development, save costs, reduce the resources and risks associated with other problem eradication efforts, and increase product quality by automatically performing functions that can otherwise take hundreds of programming hours, like the following:

- Check source files for errors

- Identify and correct coding problems

- Isolate obscure problems

- Map out unfamiliar programs

- Enforce programming standards

- Compute customized quantitative indicators of code size, complexity, and density

- Generate detailed reports on the condition and structure of the code

- Document the code review process

In short, static source code analysis reduces the resources required by other problem eradication tactics and tools by helping the programmer eliminate hundreds of problems at their source.
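
To make the "quantitative indicators" item above concrete, here is a minimal Python sketch, assuming the input is the text of a single C function. It counts total, blank, comment, and code lines, reports comment density as comment lines per code line, and approximates McCabe cyclomatic complexity as the number of decision points (`if`, `for`, `while`, `case`, `&&`, `||`, `?`) plus one. The name `code_metrics` is hypothetical, and a real analyzer would work from a parsed syntax tree rather than regular expressions.

```python
import re

# Each match adds one decision point to the function's control flow.
DECISION_PATTERN = re.compile(r"\b(?:if|for|while|case)\b|&&|\|\||\?")


def code_metrics(source: str) -> dict:
    """Return size, density, and complexity indicators for one C function.

    Toy sketch: only whole-line '//' comments are recognized; block
    comments, trailing comments, and string literals are not handled.
    """
    lines = source.splitlines()
    blank = [line for line in lines if not line.strip()]
    comments = [line for line in lines if line.strip().startswith("//")]
    code = [line for line in lines
            if line.strip() and not line.strip().startswith("//")]
    # Approximate McCabe cyclomatic complexity: decision points + 1.
    decisions = len(DECISION_PATTERN.findall("\n".join(code)))
    return {
        "total_lines": len(lines),
        "blank_lines": len(blank),
        "comment_lines": len(comments),
        "code_lines": len(code),
        "comment_density": len(comments) / len(code) if code else 0.0,
        "cyclomatic_complexity": decisions + 1,
    }


if __name__ == "__main__":
    sample = """int clamp(int x, int lo, int hi) {
    // keep x inside [lo, hi]
    if (x < lo) return lo;
    if (x > hi) return hi;

    return x;
}"""
    print(code_metrics(sample))
```

For the `clamp` sample, the two `if` statements give a cyclomatic complexity of 3; a team could set a threshold on that number as one of the enforced programming standards.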

Chart 2

Caption: In "Software Metrics: Establishing a Company-Wide Program" (Prentice-Hall) Grady and Caswell showed that "static testing is four times more effective than dynamic testing".

Brushing out problems

Also known as lint tools, static source code analyzers help programmers more easily identify and correct problems that often get past a compiler, such as:

- Syntax errors

- Inconsistencies in common block declarations

- Redundancies

- Unused functions, subroutines, and variables

- Inappropriate arguments passed to functions

- Non-portable usage

- Noncompliance with codified style standards

- Type usage conflicts across different subprograms/program units

- Variables that are referenced but not set

- Maintenance problems
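
Two of the checks in the list above, unused variables and variables that are referenced but not set, can be sketched in a few lines of Python. This toy version, with the hypothetical name `lint_locals`, assumes one C function per input string and simple declarations such as `int total;`. It relies on regular expressions and ignores scopes, pointers, and the order of reads and writes, all of which a real lint tool tracks.

```python
import re

# Simple scalar declarations such as "int total;" or "int count = 0;".
# Group 1 is the variable name; group 2 shows whether it has an initializer.
DECL = re.compile(r"\b(?:int|long|char|float|double)\s+([A-Za-z_]\w*)\s*(=|;)")


def lint_locals(func_source: str) -> list[str]:
    """Flag locals that are never used, or are read but never assigned."""
    warnings = []
    for name, terminator in DECL.findall(func_source):
        uses = len(re.findall(rf"\b{name}\b", func_source))
        # "name = ..." counts as setting the variable; "name == ..." does not.
        assigned = re.search(rf"\b{name}\s*=(?!=)", func_source) is not None
        if uses == 1:
            warnings.append(f"'{name}' declared but never used")
        elif terminator == ";" and not assigned:
            warnings.append(f"'{name}' referenced but not set")
    return warnings


if __name__ == "__main__":
    sample = """int f(int a) {
    int unused;
    int total;
    int count = 0;
    count = count + a;
    return total + count;
}"""
    for warning in lint_locals(sample):
        print(warning)
    # prints:
    # 'unused' declared but never used
    # 'total' referenced but not set
```

A typical compiler accepts this function as written; it is exactly the kind of latent problem the list describes.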

Team linting

A static source code analyzer does more than enhance a programmer's ability to identify and eradicate problems in code; it can also improve process and performance across the software development team. A team leader can use a static source code analyzer to identify problems in groups and modules, while QA managers can use it to verify the integrity of an entire package. Project managers can use it to help establish and enforce coding standards and to establish quality control measures.

Long-term protection

In addition to the immediate returns possible by automating the process of identifying problems at the coding stage of software development, an organization can derive significant long-term benefits from static source code analysis.

A software product must be maintained by an organization throughout its entire life cycle, while employees can come and go. When a problem is identified and the coder is on another project or no longer with the company - or simply separated by time from the original coding process - the organization typically must relearn what was originally done, or even duplicate the original coding step to correct the problem.

By automating the analysis and documentation of the code, the organization will always have a record of what was done and will be able to more easily identify problems without duplicating previous efforts. The messages produced by a static analyzer can also help prevent code drift by identifying questionable coding practices.

In short, automated static source code analysis significantly reduces potential expenses from repetitive processes.

Historical lint

Yesterday

Today's commercial lint tools are the progeny of a free lint utility that was released with Unix. Unix's lint was originally developed to help ensure that function calls were consistent across source-file boundaries. While proper use of ANSI C function prototypes can help solve this problem today, most other sources of errors in C code remain, including uninitialized variables, order-of-evaluation dependencies, loss of precision, potential uses of the null pointer, consistency problems, and programmer error. The Unix lint utility was also difficult to use and has never been fully utilized by Unix programmers. However, in the early 1980s Cleanscape Software International (then IPT) took the idea of a static source code analyzer and enhanced it to produce advanced static source code analysis tools for Fortran and C.
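
The cross-file consistency check that the original lint was built for can be illustrated with a small Python sketch: collect each function definition's parameter count from every file, then flag calls in any file whose argument count disagrees. The names `check_calls` and `count_args` are hypothetical, and the regular expressions only handle simple, non-nested calls. With an ANSI C prototype in scope, a modern compiler reports this particular mismatch itself.

```python
import re

# A definition looks like "type name(params) {"; a call looks like "name(args)".
DEFINITION = re.compile(r"\b[A-Za-z_]\w*\s+([A-Za-z_]\w*)\s*\(([^)]*)\)\s*\{")
CALL = re.compile(r"\b([A-Za-z_]\w*)\s*\(([^()]*)\)")


def count_args(arg_text: str) -> int:
    """Count comma-separated items; an empty list or "void" means zero."""
    text = arg_text.strip()
    if text in ("", "void"):
        return 0
    return len(text.split(","))


def check_calls(files: dict[str, str]) -> list[str]:
    """Report calls whose argument count differs from the definition's."""
    arity = {}
    for source in files.values():
        for name, params in DEFINITION.findall(source):
            arity[name] = count_args(params)

    problems = []
    for filename, source in files.items():
        for name, args in CALL.findall(source):
            n = count_args(args)
            if name in arity and n != arity[name]:
                problems.append(f"{filename}: {name}() called with {n} "
                                f"argument(s) but defined with {arity[name]}")
    return problems


if __name__ == "__main__":
    files = {
        "scale.c": "int scale(int x, int factor) {\n    return x * factor;\n}\n",
        "main.c": "int main(void) {\n    return scale(21);\n}\n",
    }
    for problem in check_calls(files):
        print(problem)
    # prints: main.c: scale() called with 1 argument(s) but defined with 2
```

Because each file is inspected against definitions gathered from all files, the check catches a mismatch that compiling `main.c` alone, without a prototype, would not.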

Today

Today, static analysis tools are available for most programming languages and should be considered a basic utility in any programmer's toolbox. Basic linting capabilities have even started appearing in compilers, but these features still tend to pale in comparison to today's dedicated static analyzers.

Tomorrow, literally

The future of lint tools could very well be on the Internet. Recently introduced web-delivered static analysis tools like Cleanscape LintPlus Online (See Chart 3) and Cleanscape FortranLint Online promise to provide developers with the bug-stomping power of leading static analysis tools with the convenience, ease of use, and cost savings of a web-delivered application.

> See web-based lint tool for C at http://www.cleanscape.net/products/lintonline/login.html

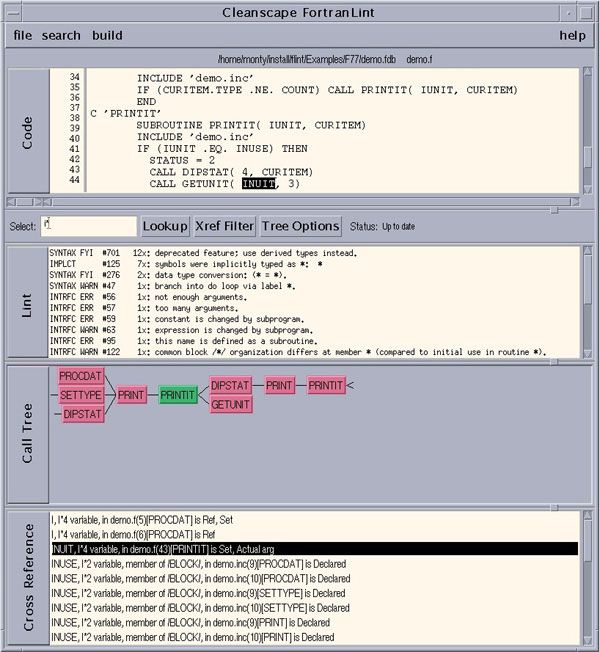

Using lint tools today

In addition to providing feature sets that go far beyond the original lint and what typical compilers offer, today's static source code analysis utilities are far easier to use. Good lint utilities break static source code analysis down into a few easy steps: the user selects reporting and analysis options and points the utility at the source code files to analyze (See Chart 4).

Often in a matter of seconds, the utility conducts a comprehensive analysis of the code and its structure and generates reports according to the options the user selected. Basic reports might include:

- Analysis report

- Call tree report with include-file tree

- Cross-reference report

Chart 4

Caption: Web-delivered lint tools promise to give developers the bug-stomping power of leading lint tools with the convenience, ease-of-use and cost-savings of the Internet.

This screen shot of Cleanscape FortranLint shows how a static analysis tool analyzes the code and its structure and generates reports that help the programmer eliminate problems, understand and document the structure of the code, verify the integrity of the package, and adhere to quality control measures.

Analysis report

An analysis report typically summarizes all of the problems found by the lint utility, including syntax errors, global interface problems, warnings, unused locals, unused results, portability problems, strict prototype problems, unusual constructs, and others. A source listing option shows the errors in the context of the actual code and might provide live links back to the original source so the programmer can make quick changes and analyze the code again. The summary report might include statistics such as function counts, I/O counts, and error summaries, as well as suggestions for correcting problems.
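
The layout described above, a source listing with problems shown in context followed by a per-category summary, might be produced along these lines. This is a minimal Python sketch; the `Diagnostic` record and `analysis_report` function are hypothetical, and the diagnostics are supplied by hand here rather than produced by an analyzer.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Diagnostic:
    line: int       # 1-based line number in the analyzed source
    category: str   # e.g. "unused local", "portability"
    message: str


def analysis_report(source: str, diagnostics: list[Diagnostic]) -> str:
    """Render a source listing with problems in context, then a summary."""
    by_line: dict[int, list[Diagnostic]] = {}
    for diag in diagnostics:
        by_line.setdefault(diag.line, []).append(diag)

    out = []
    for number, text in enumerate(source.splitlines(), start=1):
        out.append(f"{number:4}  {text}")
        # Show each problem directly under the line it refers to.
        for diag in by_line.get(number, []):
            out.append(f"      ^ {diag.category}: {diag.message}")

    out.append("")
    out.append("Summary:")
    counts = Counter(diag.category for diag in diagnostics)
    for category, count in sorted(counts.items()):
        out.append(f"  {category}: {count}")
    return "\n".join(out)


if __name__ == "__main__":
    src = "int f(void) {\n    int unused;\n    return 0;\n}"
    diags = [Diagnostic(2, "unused local", "'unused' is never used")]
    print(analysis_report(src, diags))
```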

Call tree report with include-file tree

A call tree report typically shows the calling structure of the analyzed code and identifies areas where the structure is improperly formatted or broken. It might also contain an include-file tree that graphically shows the nesting structure of the "include" files used by the input code. A graphical depiction of the calling structure can be particularly helpful for a programmer who needs to become familiar with legacy code quickly during transition and upgrade projects, and for new project members learning code from an existing project.
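
A call tree can be rendered from a call graph with a depth-first walk. The sketch below assumes the graph has already been extracted from the source as a mapping from each defined function to the functions it calls; `call_tree` is a hypothetical name. It flags callees with no definition (one kind of broken structure) and marks recursion instead of expanding it forever. The same walk would produce an include-file tree if the mapping went from each file to the files it includes.

```python
def call_tree(graph: dict[str, list[str]], root: str) -> list[str]:
    """Render an indented call tree from a caller -> callees mapping.

    Callees with no entry in the graph are marked "(undefined)"; a call
    back into a function already on the current path is marked
    "(recursive)" and is not expanded again.
    """
    lines: list[str] = []

    def walk(name: str, depth: int, path: tuple[str, ...]) -> None:
        indent = "  " * depth
        if name not in graph:
            lines.append(f"{indent}{name} (undefined)")
        elif name in path:
            lines.append(f"{indent}{name} (recursive)")
        else:
            lines.append(f"{indent}{name}")
            for callee in graph[name]:
                walk(callee, depth + 1, path + (name,))

    walk(root, 0, ())
    return lines


if __name__ == "__main__":
    calls = {
        "main": ["init", "run"],
        "init": [],
        "run": ["step", "log_result"],  # log_result is never defined
        "step": ["run"],                # indirect recursion: run -> step -> run
    }
    print("\n".join(call_tree(calls, "main")))
```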

Cross-reference report

A cross-reference report typically lists the identifiers in the analyzed code, such as variables, functions, subroutines, and common blocks, together with the locations where each one is declared and referenced. This makes it easier to trace how a symbol is used across files and to judge the impact of changing it.
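
At its simplest, a cross-reference is a map from each identifier to the lines where it appears. The following Python sketch, with the hypothetical name `cross_reference`, builds that map for a snippet of C. It drops `//` comments and C keywords but, unlike a real tool, does not separate declarations from uses, track scopes, or skip string literals.

```python
import re
from collections import defaultdict

C_KEYWORDS = {
    "auto", "break", "case", "char", "const", "continue", "default", "do",
    "double", "else", "enum", "extern", "float", "for", "goto", "if", "int",
    "long", "register", "return", "short", "signed", "sizeof", "static",
    "struct", "switch", "typedef", "union", "unsigned", "void", "volatile",
    "while",
}
IDENTIFIER = re.compile(r"\b[A-Za-z_]\w*\b")


def cross_reference(source: str) -> dict[str, list[int]]:
    """Map each non-keyword identifier to the line numbers where it appears."""
    xref = defaultdict(list)
    for number, line in enumerate(source.splitlines(), start=1):
        code = line.split("//", 1)[0]  # toy: a "//" inside a string is mishandled
        for name in IDENTIFIER.findall(code):
            if name not in C_KEYWORDS and number not in xref[name]:
                xref[name].append(number)
    return dict(sorted(xref.items()))


if __name__ == "__main__":
    src = """int total;
int add(int x) {
    total = total + x; // accumulate
    return total;
}"""
    for name, lines in cross_reference(src).items():
        print(f"{name:8} {lines}")
```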

Increasing competitive viability

While a static source code analysis tool will not eliminate the need for code reviews, compilers, debuggers, testing, or dynamic analysis, it can greatly reduce the overhead on these more expensive resources by catching problems early and allowing programmers to correct problems at the source while they are most familiar with their code.

Identifying problems at the source level with a static source code analysis tool shortens the development cycle, reduces project delays that result from post-compile testing, and lowers costs by eliminating problems with cheaper resources earlier in the development process. In the long haul, by helping to eliminate problems at the source, static source code analysis increases the competitive viability of the software development organization by inverting the adage introduced earlier:

"The sooner a software bug is eradicated, the greater and faster the potential return on investment for a software project."

About the Author

Brent Duncan is director of marketing for Cleanscape Software International, where he is responsible for developing and implementing worldwide integrated marketing and product strategies. As an executive and strategic management consultant, Mr. Duncan has built and directed integrated marketing and cross-functional development teams for Honeywell SE, Westinghouse Security Electronics, and Auspex Systems. Mr. Duncan holds a master's degree in Organizational Management from the University of Phoenix and a bachelor's degree in Communications and Public Relations from BYU. He also teaches strategic management and marketing communications as an adjunct professor at the University of Phoenix.

Mr. Duncan can be contacted at: [email protected].

Resources

- Marilyn Bush, "Improving Software Quality: The Use of Formal Inspections at the Jet Propulsion Laboratory," Proceedings of the 12th International Conference on Software Engineering, IEEE Computer Society Press.

- Reg Clemens, Ph.D. Physics, "White Paper: A LINT for Fortran Programs," http://www.cleanscape.net/stdprod/flint/flint_review.html

- Compuware, "Accelerating Development of Multi-language Components and Applications for the Enterprise and Internet," white paper.

- Brent Duncan, "Cleanscape 2001 Software Tools Survey," Cleanscape Software International.

- Louis A. Franz and Jonathan C. Shih, "Estimating the Value of Inspections and Early Testing for Software Projects," Hewlett-Packard Journal.

- R. B. Grady and D. L. Caswell, "Software Metrics: Establishing a Company-Wide Program," Prentice-Hall, 1987.

- Steve Maguire, "Writing Solid Code," Microsoft Press, 1993.

- Tom Parker and Glenn Wright, "White Paper: Using Fortran Source Code Analysis at NCAR," http://www.cleanscape.net/stdprod/flint/flintcase.html

- Karl Wiegers, "Personal Process Improvement: You don't need an official sanction to tune up your own engineering savvy," Software Development Magazine, May 2000.
